
Table of Contents

- Flutter Project Structure with Codestral: LLM-Assisted Code Generation

- From English Prompts to Flutter Application Source Code

- How to Break Down App Ideas for Better LLM Outputs

- Generate the Perfect App Name with LLMs

- Planning and Executing App Implementation with LLMs

- The Demo

- LLM-Driven Code: Turning Clear Prompts into Scalable Apps

Large Language Models (LLMs) are rapidly transforming how developers build applications, but tapping into their full potential requires more than just generic instructions. When it comes to generating code, especially for frameworks like Flutter, understanding how to craft precise prompts can be the difference between a functioning prototype and a tangled mess of unscalable code. This article explores the step-by-step process of using LLMs to create a well-structured Flutter app scaffold that aligns with your project’s unique requirements.

LLMs occasionally hallucinate, which makes them unreliable for purely factual prompts, but they are great at exploring correlations between words, even across different languages. Trained specifically on code samples, Mistral’s Codestral model lets users translate between quite unexpected language pairs, e.g., from English to a programming language.

In this article, we’ll explore how Codestral can be utilized to generate Flutter application scaffolds that accommodate a user’s English-based app idea without needing an immediate refactor before a human developer can expand on its functionality. Learn how the compact yet powerful Codestral model, paired with the expertise embedded in the Flutter CLI, can revolutionize your app development process.

Flutter Project Structure with Codestral: LLM-Assisted Code Generation

General-purpose LLMs like Mistral’s Large 2, OpenAI’s GPT-4, etc., tend to generate code solutions in a single file. While this approach significantly increases the chance of the resulting code working, it also makes the code a poor foundation for any further functionality expansion.

Codestral, on the other hand, is a specialized model, as it was trained on code samples specifically. This makes the model surprisingly good at splitting an implementation into a logical file structure and then importing those files into the main project file. All that will be presented in a bit via proper prompts, of course.

Codestral can present a valid Flutter project structure, which you can test on your own with, e.g., a “Present a default Flutter project structure in a text tree view” prompt. But why not support the process with a “classical” approach and call the flutter create … command instead? This ensures that the structure is actually valid, e.g., that pubspec.yaml is filled with the relevant dependencies. Codestral will then come in handy to describe the files necessary to implement the app’s basic navigation, along with their initial implementation.
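As a minimal sketch of that hand-off, the scaffold step could be driven from Dart with `Process.run`. The function names and the conservative name check are assumptions made for illustration, not the article's actual tooling, and the Flutter SDK is assumed to be on the PATH.

```dart
import 'dart:io';

/// Builds the argument list for `flutter create`.
/// Conservative check (an assumption of this sketch): lowercase snake_case
/// starting with a letter.
List<String> flutterCreateArgs(String projectName) {
  final validName = RegExp(r'^[a-z][a-z0-9_]*$');
  if (!validName.hasMatch(projectName)) {
    throw ArgumentError.value(projectName, 'projectName', 'must be snake_case');
  }
  return ['create', projectName];
}

/// Runs `flutter create <projectName>` inside [workingDirectory].
Future<void> scaffoldProject(
    String projectName, String workingDirectory) async {
  final args = flutterCreateArgs(projectName);
  final result = await Process.run(
    'flutter',
    args,
    workingDirectory: workingDirectory,
    runInShell: true, // lets Windows resolve flutter.bat
  );
  if (result.exitCode != 0) {
    throw ProcessException(
        'flutter', args, '${result.stderr}', result.exitCode);
  }
}

Future<void> main(List<String> args) async {
  if (args.isEmpty) {
    print('usage: dart run scaffold.dart <project_name>');
    return;
  }
  await scaffoldProject(args.first, Directory.current.path);
}
```

Validating the name before spawning the process keeps a malformed LLM-proposed name from reaching the CLI at all.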

This kind of LLM hand-off is the main idea of this write-up – why risk hallucinations at the foundation level when we have other tools that guarantee correctness? For the steps that traditional tools aren’t able to accomplish, we’ll prompt Codestral (or Ministral or Large 2) to help. The creation process becomes both cheaper and easier to manage.

From English Prompts to Flutter Application Source Code

Transforming a simple idea into a functional Flutter application starts with a well-crafted prompt. By breaking down the app's purpose and key features into concise, structured descriptions, we create the foundation for generating executable Dart code.

Our plan is to:

1. Obtain a prompt from the user

2. Generate and run a matching Flutter application

To make the prompting process manageable, we’d expect the prompt to loosely follow this structure: a one-sentence explanation of the application’s purpose, followed by the features critical to the user. Example prompts:

“Video streaming app. Features: recommended videos, following feed, video upload page, account settings.”

“Chat app. Features: list contacts, user stories browser, account settings.”

Warning! Extracting the app idea into such a concise description might be the hardest step in the whole process; you might want to contact professionals to help you with that 😉

To convert the app idea into executable Dart code, we’ll use the following pipeline:

1. Elaborate and normalize the app idea’s structure

2. Find an app name for the ideated project

3. Plan and write down implementation

Every step will be managed by a separate model – it could be any capable model on the market, but we’ll focus on Mistral’s models, namely Large 2, Ministral, and Codestral.
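The three steps can be sketched as one function that chains model calls. Everything here is illustrative: the `Complete` callback, the `StepPrompts` and `PipelineResult` classes, and the model aliases are assumptions, as is the choice to feed the elaborated idea (rather than the raw user prompt) into the naming step. The actual prompt texts are quoted later in this article.

```dart
/// Hypothetical callback: sends [systemPrompt] and [userMessage] to [model]
/// and returns the model's text reply. The transport is left open.
typedef Complete = Future<String> Function(
    String model, String systemPrompt, String userMessage);

/// Prompts for the three pipeline steps.
class StepPrompts {
  final String elaborate;
  final String name;
  final String implement;
  const StepPrompts(
      {required this.elaborate, required this.name, required this.implement});
}

class PipelineResult {
  final String elaboratedIdea;
  final String appName;
  final String implementation;
  PipelineResult(this.elaboratedIdea, this.appName, this.implementation);
}

Future<PipelineResult> runPipeline(
    String userPrompt, StepPrompts prompts, Complete complete) async {
  // 1. Elaborate and normalize the idea (Large 2; alias is an assumption).
  final idea =
      await complete('mistral-large-latest', prompts.elaborate, userPrompt);
  // 2. Find an app name (Ministral 8B). Assumption: it sees the elaborated idea.
  final appName = await complete('ministral-8b-latest', prompts.name, idea);
  // 3. Plan and write down the implementation (Codestral).
  final code = await complete('codestral-latest', prompts.implement, idea);
  return PipelineResult(idea, appName.trim(), code);
}

Future<void> main() async {
  // Fake model that only reports which model was asked.
  Future<String> fake(String model, String system, String user) async =>
      '<reply from $model>';

  final result = await runPipeline(
    'Chat app. Features: list contacts, user stories browser, account settings.',
    const StepPrompts(elaborate: '...', name: '...', implement: '...'),
    fake,
  );
  print(result.appName);
}
```

Passing the model call in as a callback keeps the pipeline testable without network access and makes it easy to swap in any other capable model.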

How to Break Down App Ideas for Better LLM Outputs

The more information we give in the initial prompt, the more precise the LLM’s output can be. We established that the user prompt is short to keep it focused on the goal, but now we have to elaborate on it for better results. There’s a lot to unpack from, e.g., a “following feed” feature. The hidden meaning is clear to tech-savvy individuals, so let’s employ one to explain it for us: Large 2 will be a perfect fit for that job.

The prompt we give to the model is simple:

“You are a software architect planning a route-based Flutter application. Summarize the following app idea using technical language. Each feature explanation and purpose have to be comma-separated. Summarize only the features; do not provide any guidance. Be concise.”

To illustrate the expected output, an elaborated video streaming app idea results in:

“App Name: FlutterFlow Video Streaming App

Features:

- Recommended Videos Screen, implements a personalized video suggestion algorithm, purpose: enhance user engagement, discover new content,

- Following Feed Screen, displays content from subscribed channels, purpose: curate personalized feed, keep users updated on followed creators,

- Video Upload Page facilitates user-generated content, purpose: encourage user engagement, build content library,

- The account Settings Page manages user profiles, preferences, and purpose: to customize the user experience and handle privacy and notifications.”

This gives us an optimal amount of context for each feature and clearly separates them.
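For illustration, here is one way the architect prompt and the user's idea could be packed into a chat-style request body. The article does not say how the prompt was delivered, so the system/user split, the `buildChatBody` helper, and the `mistral-large-latest` alias are assumptions of this sketch.

```dart
import 'dart:convert';

/// The architect prompt quoted above, used here as the system message.
const architectPrompt =
    'You are a software architect planning a route-based Flutter application. '
    'Summarize the following app idea using technical language. '
    'Each feature explanation and purpose have to be comma-separated. '
    'Summarize only the features; do not provide any guidance. Be concise.';

/// Builds a JSON body in the common chat-completions shape:
/// a model id plus a list of role-tagged messages.
String buildChatBody(String model, String systemPrompt, String userIdea) {
  return jsonEncode({
    'model': model,
    'messages': [
      {'role': 'system', 'content': systemPrompt},
      {'role': 'user', 'content': userIdea},
    ],
  });
}

void main() {
  final body = buildChatBody(
    'mistral-large-latest', // assumed alias for Large 2
    architectPrompt,
    'Video streaming app. Features: recommended videos, following feed, '
        'video upload page, account settings.',
  );
  print(body);
}
```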

Generate the Perfect App Name with LLMs

To write code into files, we first need to create a Flutter project. To call flutter create, though, we need to provide a project name, which we don’t have yet. A generic name would work, but it would also get stuck in the implementation, making the project awkward to use from the get-go. Happily, we can use an LLM for that purpose, too! For this task, I’ve picked Ministral 8B.

With the prompt:

“Propose a name for the app. Respond just with the name, no explanation.”

The model is able to deliver a meaningful name for the app. The Flutter CLI command requires a snake_case name, though, which we ensure simply by using the change_case Dart package rather than making the prompt longer and overconstrained.
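To show what that normalization involves, here is a hand-rolled stand-in for the conversion. It is not the change_case package's API; `toSnakeCase` and the `app_` prefix for names starting with a digit are assumptions made so the sketch runs without dependencies.

```dart
/// Converts a model-proposed app name into a snake_case project name.
/// Hand-rolled for illustration; the article uses the change_case package.
String toSnakeCase(String name) {
  final spaced = name
      // Split camelCase boundaries: "StreamHub" -> "Stream Hub".
      .replaceAllMapped(
          RegExp(r'([a-z0-9])([A-Z])'), (m) => '${m[1]} ${m[2]}')
      // Quotes, punctuation, and whitespace all become single separators.
      .replaceAll(RegExp(r'[^A-Za-z0-9]+'), ' ')
      .trim();
  if (spaced.isEmpty) {
    throw FormatException('No usable characters in "$name"');
  }
  final snake = spaced.toLowerCase().split(' ').join('_');
  // Dart package names must not start with a digit (prefix is an assumption).
  return RegExp(r'^[0-9]').hasMatch(snake) ? 'app_$snake' : snake;
}

void main() {
  print(toSnakeCase('StreamHub')); // stream_hub
  print(toSnakeCase('"Chatty!"')); // chatty
}
```

Handling stray quotes and punctuation in code is what lets the naming prompt stay as short as it is.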

Planning and Executing App Implementation with LLMs

Now, to the juicy part: implementation. Our goal is to obtain a well-structured codebase on which the development process can start right away without manual intervention. The goal (for now) is not to fully implement the app; rather, the result should be a step closer to our specific idea than a basic Flutter boilerplate project. The story of creating the prompt for this step is long and confusing, and too specific for a step-by-step explanation to be of value. However, here are the major considerations behind it:

- There’s a lot of Flutter code around the web, some of which is deprecated. We had to include a few instructions for some widgets to prevent the LLM from using outdated code.

- The prompt is structured in an XML-like manner, which makes internal references possible and consistent.

- The prompt includes an example of a feature implementation, making the outputs more focused. See few-shot prompting (in this case, one example gives good results).

The unexpected and interesting element of the whole prompt is how much weight proper nomenclature carries. In the prompt, we reference “routes” for features, which is a standard way of defining full-screen parts of a Flutter application. At one point in the experiment, I tried changing the “routes” references to “pages”, seemingly an equivalent concept. However, with “routes,” the generation was successful 90% of the time, while with “pages,” not a single one out of 10 attempts resulted in a functioning or even correctly structured application. I suppose it still takes a developer to harness LLMs to create software.

The implementation prompt is:

“<objective> You are a Flutter expert developer. You're tasked with planning and implementing an application specified below. Plan the implementation in the following order:

1. Navigation setup - plan route names and assign widget names for those routes. Use BottomNavigationBar for in-app navigation;

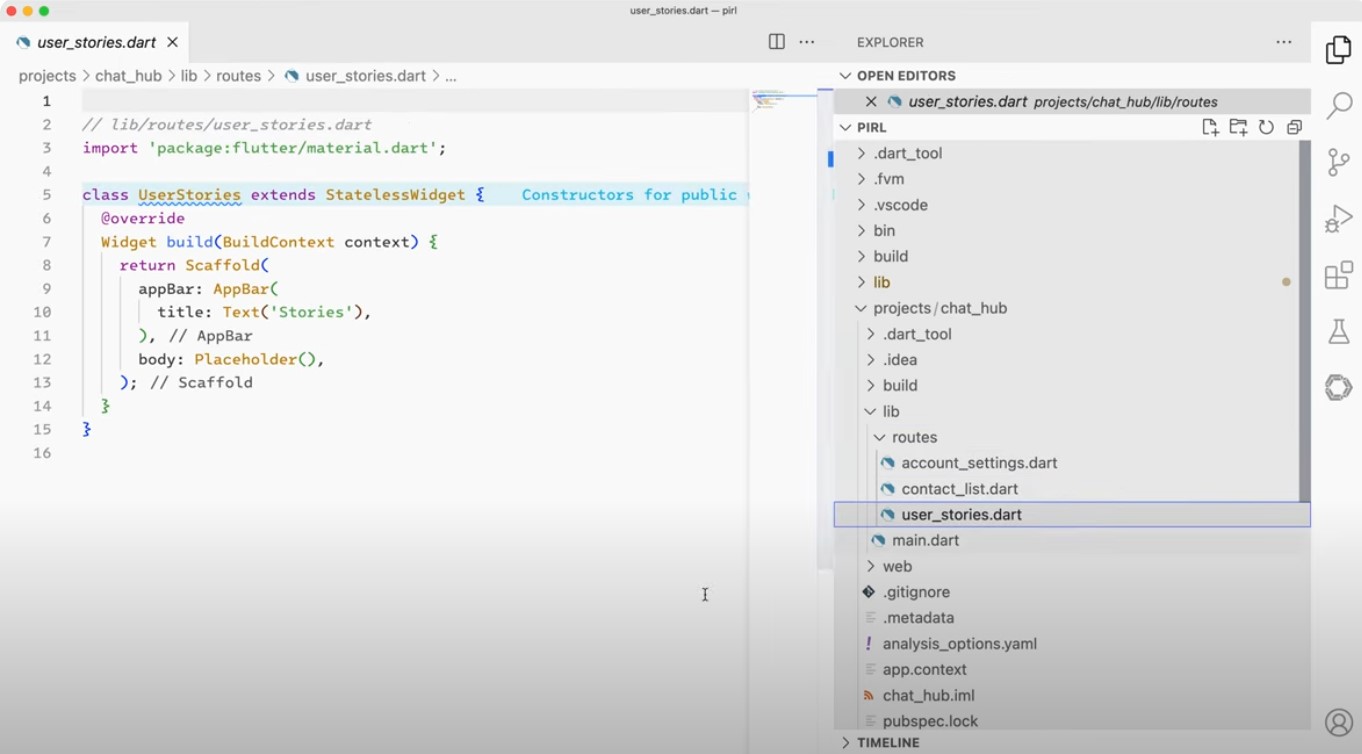

2. File structure - keep each route's page implementation in a separate file in the “routes” subdirectory;

3. Page widget implementation - fill each page route widget with a Scaffold and an AppBar;

</objective>

<assignment> Wait for the user's app specification and plan the implementation for it following <rules/> and remembering the <objective/> </assignment>

<rules>

- Respond only with code and respective full file paths as the first comment line.

- Avoid the const keyword.

- Be sure to use label instead of title for BottomNavigationBarItem widget.

- Don't set custom selectedItemColor for BottomNavigationBarItem.

- Be sure to set the type field to BottomNavigationBarType.fixed on BottomNavigationBar.

- Use Placeholder() widgets as the contents of each route.

- Use relative imports.

- main.dart should contain the BottomNavigationBar that links to other sub-routes.

</rules>

<example>

```dart
// feature.dart
import 'package:flutter/material.dart';

class Feature extends StatelessWidget {
  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        title: Text('Feature name'),
      ),
      body: Placeholder(),
    );
  }
}
```

</example>”

The output contains separate blocks of code, each with a comment that describes the target file path in the project’s lib/ directory.
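Turning such a response into files on disk could look roughly like the sketch below. The `extractFiles` and `writeFiles` helpers are hypothetical, and the sketch assumes each fenced block starts with a `//` comment holding a path relative to lib/ (a leading `lib/` is stripped if the model adds it).

```dart
import 'dart:io';

/// Three backticks, built at runtime so this listing can itself be fenced.
final codeFence = '`' * 3;

/// Matches one fenced block and captures its body.
final blockPattern =
    RegExp(codeFence + r'(?:dart)?[ \t]*\r?\n([\s\S]*?)' + codeFence);

/// Maps "path relative to lib/" to file contents for every fenced block
/// whose first non-empty line is a path comment.
Map<String, String> extractFiles(String response) {
  final files = <String, String>{};
  for (final match in blockPattern.allMatches(response)) {
    final lines = match.group(1)!.split('\n');
    final first = lines.indexWhere((line) => line.trim().isNotEmpty);
    if (first == -1) continue;
    final header = lines[first].trim();
    if (!header.startsWith('//')) continue; // no path comment, skip block
    var path = header.substring(2).trim();
    if (path.startsWith('lib/')) path = path.substring(4);
    // Refuse empty paths and paths that could escape lib/.
    if (path.isEmpty ||
        path.startsWith('/') ||
        path.split('/').contains('..')) {
      continue;
    }
    files[path] = lines.sublist(first + 1).join('\n');
  }
  return files;
}

/// Writes the extracted files under [libDir], creating subdirectories.
Future<void> writeFiles(Map<String, String> files, String libDir) async {
  for (final entry in files.entries) {
    final file = File('$libDir/${entry.key}');
    await file.create(recursive: true);
    await file.writeAsString(entry.value);
  }
}

void main() {
  final response =
      '${codeFence}dart\n// routes/feed.dart\nclass Feed {}\n$codeFence';
  print(extractFiles(response).keys);
}
```

Rejecting absolute paths and `..` segments is a cheap safeguard, since the paths come straight from model output.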

The Demo

As you may expect, the step-by-step process described above is easily automatable. Our tool for doing just that from the comfort of your CLI is not released yet; see it in action below:

In the demo, you can see the user prompt being passed into the pipeline described above and the resulting code being run.

LLM-Driven Code: Turning Clear Prompts into Scalable Apps

There’s a lot of potential in English-to-code pipelines; however, that potential lies in technical English prompts being translated into valid code. In such scenarios, a single imprecise word in the original prompt can make or break the whole generation process. Picking the right words still looks like a job for a programmer, but the programmer doesn’t have to be slowed down by implementation details.

The synergy between “classical” CLI tools and the LLM outputs that drive them is strong. The code generated by the LLM has to be valid, but not necessarily perfect: best practices, for example, can be applied automatically by an accompanying linter and/or code formatter after the generation is complete. This allows the prompts to focus on the business value rather than getting bogged down in semantics or optimizations.
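A post-generation pass of that kind could be sketched as follows. The selection and order of commands are assumptions (the article does not name specific tools), and both the Dart and Flutter SDKs are assumed to be on the PATH.

```dart
import 'dart:io';

/// Commands to run inside the generated project, in order.
/// Assumption: this selection and order are illustrative.
const postGenerationCommands = [
  ['dart', 'fix', '--apply'], // apply automated fixes for analyzer findings
  ['dart', 'format', '.'], // normalize formatting
  ['flutter', 'analyze'], // report whatever is still wrong
];

/// Runs every command in [projectDir]; stops at the first failure.
Future<bool> polish(String projectDir) async {
  for (final command in postGenerationCommands) {
    final result = await Process.run(
      command.first,
      command.sublist(1),
      workingDirectory: projectDir,
      runInShell: true, // lets Windows resolve the .bat wrappers
    );
    stdout.write(result.stdout);
    if (result.exitCode != 0) {
      stderr.write(result.stderr);
      return false;
    }
  }
  return true;
}

Future<void> main(List<String> args) async {
  if (args.isEmpty) {
    print('usage: dart run polish.dart <project_dir>');
    return;
  }
  exitCode = await polish(args.first) ? 0 : 1;
}
```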
